Politics

Big Data and DevOps – Winning Combination for Global Enterprises

Published 2 years ago

By Drew Simpson

Technology advances rapidly, and almost all industry sectors tend to embrace changes to survive amid this troubled time. Emerging technologies like AI, big data, and ML can prepare enterprises for the future while ensuring their growth. However, entrepreneurs must combine technologies to achieve their long-term objectives while effectively addressing intensifying competition.

“Big Data” has become a buzzword in the corporate world. Big Data projects win the day by extracting actionable insights from available data. However, there are always ways to increase their efficiency further. One of them is combining Big Data with DevOps practices. This article digs into the Big Data and DevOps combination. But before moving ahead, let’s briefly define both terms.

Big Data: A Brief Introduction

Big data refers to large and complex data sets that are collected from a variety of sources. Their volume and complexity are so great that traditional data processing software cannot manage them. These data sets help entrepreneurs resolve various business tasks and make informed decisions in real time. Standard data cannot serve this purpose effectively.

Big data management involves various processes, including obtaining, storing, sharing, analyzing, digesting, visualizing, transforming, and testing corporate data to provide the desired business value. It also helps streamline processes by introducing automation.

Furthermore, as enterprises experience huge pressure for faster delivery in this competitive market, Big Data can assist them with actionable insights. But, when it comes to providing all this with maximum efficiency, DevOps brings the right tools and practices.

Exciting Stats for Big Data

- Experts indicate that over 463 exabytes of data will be created every day by 2025, which is equivalent to around 212,765,957 DVDs.

- Poor quality of data can cost the US economy as much as USD 3.1 trillion annually.

- The Big Data market is expected to reach a value of around USD 103 billion by 2027.

- Over 97 percent of organizations say they are investing in Big Data and AI.

- Around 95 percent of companies say their inability to understand and manage unstructured data holds them back.

After knowing the importance of Big Data, let us understand the DevOps concept.

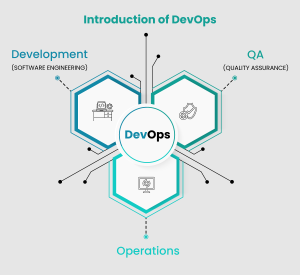

Introduction to DevOps

DevOps is a methodology, culture, and set of practices that aim to facilitate and improve communication and collaboration between development and operations teams. It focuses mainly on automating and streamlining the processes within a project's development lifecycle.

The essential pillars of DevOps are shorter development cycles, increased deployment frequency, rapid releases, parallel work by different experts, and regular customer feedback. Today, this concept has gained ground because of its benefits for enterprises.

It significantly increases the speed, quality, and reliability of the software. Most software projects can take advantage of the DevOps concept in agile methodology.

Key Reasons Why DevOps Is Gaining Widespread Acceptance

Lack of communication between developers and the operations team can slow development. DevOps was developed to overcome this drawback by providing better collaboration among members of both teams, which results in faster delivery. It also supports uninterrupted software delivery by minimizing complex problems and resolving them more quickly and effectively.

Most organizations have adopted DevOps to enhance user satisfaction and deliver a high-quality product within a short time while improving overall efficiency and productivity. DevOps structures and strengthens the software delivery lifecycle. It started gaining popularity in the year 2016 as more and more organizations began moving to DevOps usage.

Corporate clients who have adopted advanced technologies like Cloud and Big Data now demand that companies deliver strong software-driven capabilities. A recent survey found that 86% of organizations believe that continuous software delivery is crucial to their business. Here, DevOps can lend a helping hand to ensure the timely delivery of high-quality software.

Key DevOps Statistics

- The DevOps market is expected to grow by over USD 6 billion by 2022

- 58 percent of organizations have witnessed better performance and improved ROI after adopting DevOps

- 68 percent of companies have seen improved customer experience after deploying DevOps

- 47 percent of companies have reduced the TTM (Time to Market) of software and service deployment

DevOps offers benefits like higher reliability, stronger security, and enhanced scalability, in addition to a speedy development cycle and the ability to deliver faster updates. It also improves ownership and accountability across teams. DevOps practices have two inherent aspects: CI (Continuous Integration) and CD (Continuous Delivery). They are related to each other and contribute to increasing productivity.

- Continuous Integration (CI) is the practice of merging the code changes from multiple developers into the central repository several times a day.

- Continuous Delivery (CD) is the practice of automatically building, testing, and preparing code changes so that they can be released to the production environment at any time.
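The flow from integration to delivery can be pictured as a series of stages that run in order and stop at the first failure. The following is only a minimal sketch in Python, not a real CI/CD tool: the `Stage` class, the `run_pipeline` function, and the stage names are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One step of a hypothetical pipeline, e.g. merge, test, or package."""
    name: str
    run: Callable[[], bool]  # returns True when the stage succeeds


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure."""
    log = []
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages never run on a broken build
    return log


# Illustrative stages; real ones would call a build tool or test runner.
pipeline = [
    Stage("integrate", lambda: True),
    Stage("test", lambda: True),
    Stage("deliver", lambda: True),
]
failing = [
    Stage("integrate", lambda: True),
    Stage("test", lambda: False),
    Stage("deliver", lambda: True),
]

print(run_pipeline(pipeline))
print(run_pipeline(failing))
```

In the second run the "deliver" stage is never reached, which is the point of the practice: a change that fails its tests does not move closer to production.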

Why Big Data Needs DevOps

At times, Big Data projects can be challenging in terms of:

- handling the massive amount of data

- delivering the task faster to keep up with the growing competition or due to the pressure from the stakeholders

- responding quickly to changes

The traditional approach is insufficient to meet these challenges. Traditionally, different teams and members work in isolation. This practice creates silos and leads to a lack of collaboration. For example, data architects, analysts, admins, and many other experts each work on their own part of the job, which ultimately slows down delivery.

DevOps, on the other hand, in line with the pillars mentioned above, brings together the participants from every stage of the software delivery pipeline. It removes barriers and reduces silos between different roles, making your Big Data team cross-functional. In addition, you can experience a considerable increase in operational efficiency and a better shared vision of goals.

Simply put, DevOps tools for Big Data result in higher efficiency and productivity in Big Data processing. DevOps for Big Data uses almost the same tools as traditional DevOps environments, such as bug tracking, source code management, deployment tools, and continuous integration.

Though the Big Data and DevOps combination offers many benefits to enterprises, it has its challenges, and software companies must address them while combining Big Data and DevOps.

Challenges of Big Data and DevOps Combination

If you have decided to integrate DevOps with your Big Data project, you must understand the different types of challenges that you might experience during the process.

- The operations team of an organization must be aware of the techniques used to implement analytics models and must have in-depth knowledge of big data platforms. The analytics experts, in turn, must learn more advanced engineering practices, as they work closely with different software engineers.

- Additional resources and cloud computing technology will be required if you want to operate Big Data DevOps at maximum efficiency, as these services help IT departments concentrate more on enhancing business values instead of focusing on fixing issues related to hardware, operating systems, and some other operations.

- Though DevOps builds strong communication between developers and operations professionals, some communication challenges remain difficult to deal with. Also, testing the function of analytic models must be both meticulous and fast in production-grade environments.

Benefits of Big Data and DevOps Combination

DevOps by itself does not cover data analytics, so employing data specialists can be an added advantage for organizations that want to adopt DevOps with Big Data. Working in combination with DevOps, these specialists can help make Big Data operations more powerful and efficient. Integration of Big Data and DevOps results in the following benefits for organizations.

- Effective Software Updates

Software almost always works with data. So, if you want to update your software, you must know the types of data sources your application uses. You can learn this by interacting with your data experts while integrating DevOps and Big Data.

Errors tend to increase when organizations have trouble handling data while writing and testing the software. Finding and avoiding those errors remains the top priority in the software delivery pipeline to save time and effort. Data-related errors can be fixed in an application through strong collaboration between DevOps and Big Data experts.

Non-data experts struggle to understand software that runs on Big Data because of the tremendous variation in the types and range of data. Here, data experts can help DevOps professionals learn about the kinds of data and the challenges they need to deal with to ensure optimum outcomes. It is fair to say that when the DevOps team works in collaboration with the Big Data team, the result is applications whose real-world performance matches their performance in the development environment.

Time-consuming processes, like data migration or translation, might slow your project down. But combining DevOps and Big Data helps streamline operations and improve data quality. As a result, executives can focus on other productive and creative tasks.

As with continuous integration (CI), you can benefit from continuous analytics by combining DevOps and Big Data. This is because the combination can streamline data analysis processes and automate them using algorithms.
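One way to picture analytics that run continuously is a statistic that is updated as each new value arrives, instead of being recomputed over the full data set. The sketch below is an illustration only, under the assumption that the metric of interest is a simple mean; the `RunningStats` class is hypothetical and not taken from any particular analytics product.

```python
class RunningStats:
    """Keeps a count and a mean that are updated one value at a time."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> None:
        # Incremental mean: shift the old mean toward the new value
        # by 1/count of the difference, so no history has to be stored.
        self.count += 1
        self.mean += (value - self.mean) / self.count


stats = RunningStats()
for value in [2.0, 4.0, 6.0]:  # stand-in for values arriving from a data stream
    stats.update(value)

print(stats.count, stats.mean)
```

Because each update needs only the previous count and mean, a step like this could run automatically on every new batch of data, much as a CI job runs on every commit.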

Once the Big Data software is deployed to production, it’s time to gather accurate, real-time feedback to find its strengths and weaknesses. Here again, the close collaboration between DevOps professionals and data scientists that comes from combining DevOps and Big Data proves useful.

Critical Applications of DevOps in Big Data

Effective Planning for Software Updates

A developer has to get an insight into the data types that will be useful in developing an enterprise-grade application or software. It is also necessary to understand where the data will be used in the application and to what extent.

Give this information to your developers early, and make sure they work with a data expert.

Data experts know how the data is structured and used, and they can keep developers on the right path when designing or updating company software. This helps maintain the integrity of your system and keeps updates running smoothly.

Low Chances of Error

When software is developed and rigorously tested, data-related problems are a constant source of errors. Moreover, the error rate keeps increasing as the complexity of the software grows in line with the volume of data. This is where the collaboration of DevOps and Big Data comes into play.

Data scientists and developers can identify those errors in the early stages, saving both teams time and effort. It also becomes easier to find other errors in the application.
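Catching data-related errors early can be as simple as checking incoming records against an agreed schema before the rest of the application touches them. The following is a minimal sketch under stated assumptions: the `SCHEMA` fields and the `validate` function are hypothetical examples, and a real project would agree on the schema with its data experts.

```python
from typing import Any, Dict, List

# Hypothetical schema: field name -> expected Python type.
SCHEMA: Dict[str, type] = {"user_id": int, "amount": float, "country": str}


def validate(record: Dict[str, Any], schema: Dict[str, type] = SCHEMA) -> List[str]:
    """Return a list of problems found in one record (empty if it is valid)."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


good = {"user_id": 1, "amount": 9.5, "country": "UK"}
bad = {"user_id": "1", "amount": 9.5}

print(validate(good))
print(validate(bad))
```

A check like this could run as one of the test steps in the delivery pipeline, so that a malformed data source is flagged before the software reaches production.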

Consistent Environment

The DevOps philosophy holds that the development environment should resemble the real-world production setting, but this is hard to achieve whenever big data comes into play.

Such an environment is difficult to create when the software under development depends on many large, complex data sets and many types of data.

You’ll want your developers to be well aware of the challenges they will face, and your data expert can provide the answers. You can retain a data expert in-house or hire one on contract to help your developers produce enterprise-level software.

Concluding Lines

Though the DevOps concept has matured enough to deliver software and services faster, many enterprises worldwide still do not consider it a critical approach. Large-scale enterprises still follow older approaches because of the mistaken belief that the transition to DevOps might fail.

But the move to DevOps can help businesses deliver high-quality products quickly, and companies can provide better results in the long run after combining Big Data with DevOps.

Inner Image and Featured Image Credit: Provided by the Author; Thank you!

Fintech Kennek raises $12.5M seed round to digitize lending

Published 8 months ago on 10/11/2023

By Drew Simpson

London-based fintech startup Kennek has raised $12.5 million in seed funding to expand its lending operating system.

According to an Oct. 10 tech.eu report, the round was led by HV Capital and included participation from Dutch Founders Fund, AlbionVC, FFVC, Plug & Play Ventures, and Syndicate One. Kennek offers software-as-a-service tools to help non-bank lenders streamline their operations using open banking, open finance, and payments.

The platform aims to automate time-consuming manual tasks and consolidate fragmented data to simplify lending. Xavier De Pauw, founder of Kennek, said:

“Until kennek, lenders had to devote countless hours to menial operational tasks and deal with jumbled and hard-coded data – which makes every other part of lending a headache. As former lenders ourselves, we lived and breathed these frustrations, and built kennek to make them a thing of the past.”

The company said the latest funding round was oversubscribed and closed quickly despite the challenging fundraising environment. The new capital will be used to expand Kennek’s engineering team and strengthen its market position in the UK while exploring expansion into other European markets. Barbod Namini, Partner at lead investor HV Capital, commented on the investment:

“Kennek has developed an ambitious and genuinely unique proposition which we think can be the foundation of the entire alternative lending space. […] It is a complicated market and a solution that brings together all information and stakeholders onto a single platform is highly compelling for both lenders & the ecosystem as a whole.”

The fintech lending space has grown rapidly in recent years, but many lenders still rely on legacy systems and manual processes that limit efficiency and scalability. Kennek aims to leverage open banking and data integration to provide lenders with a more streamlined, automated lending experience.

The seed funding will allow the London-based startup to continue developing its platform and expanding its team to meet demand from non-bank lenders looking to digitize operations. Kennek’s focus on the UK and Europe also comes amid rising adoption of open banking and open finance in the regions.

Featured Image Credit: Photo from Kennek.io; Thank you!

Radek Zielinski

Radek Zielinski is an experienced technology and financial journalist with a passion for cybersecurity and futurology.

Fortune 500’s race for generative AI breakthroughs

Published 8 months ago on 10/11/2023

By Drew Simpson

As excitement around generative AI grows, Fortune 500 companies, including Goldman Sachs, are carefully examining the possible applications of this technology. A recent survey of U.S. executives indicated that 60% believe generative AI will substantially impact their businesses in the long term. However, they anticipate a one to two-year timeframe before implementing their initial solutions. This optimism stems from the potential of generative AI to revolutionize various aspects of businesses, from enhancing customer experiences to optimizing internal processes. In the short term, companies will likely focus on pilot projects and experimentation, gradually integrating generative AI into their operations as they witness its positive influence on efficiency and profitability.

Goldman Sachs’ Cautious Approach to Implementing Generative AI

In a recent interview, Goldman Sachs CIO Marco Argenti revealed that the firm has not yet implemented any generative AI use cases. Instead, the company focuses on experimentation and setting high standards before adopting the technology. Argenti recognized the desire for outcomes in areas like developer and operational efficiency but emphasized ensuring precision before putting experimental AI use cases into production.

According to Argenti, striking the right balance between driving innovation and maintaining accuracy is crucial for successfully integrating generative AI within the firm. Goldman Sachs intends to continue exploring this emerging technology’s potential benefits and applications while diligently assessing risks to ensure it meets the company’s stringent quality standards.

One possible application for Goldman Sachs is in software development, where the company has observed a 20-40% productivity increase during its trials. The goal is for 1,000 developers to utilize generative AI tools by year’s end. However, Argenti emphasized that a well-defined expectation of return on investment is necessary before fully integrating generative AI into production.

To achieve this, the company plans to implement a systematic and strategic approach to adopting generative AI, ensuring that it complements and enhances the skills of its developers. Additionally, Goldman Sachs intends to evaluate the long-term impact of generative AI on their software development processes and the overall quality of the applications being developed.

Goldman Sachs’ approach to AI implementation goes beyond merely executing models. The firm has created a platform encompassing technical, legal, and compliance assessments to filter out improper content and keep track of all interactions. This comprehensive system ensures seamless integration of artificial intelligence in operations while adhering to regulatory standards and maintaining client confidentiality. Moreover, the platform continuously improves and adapts its algorithms, allowing Goldman Sachs to stay at the forefront of technology and offer its clients the most efficient and secure services.

Featured Image Credit: Photo by Google DeepMind; Pexels; Thank you!

Deanna Ritchie

Managing Editor at ReadWrite

Deanna is the Managing Editor at ReadWrite. Previously she worked as the Editor in Chief for Startup Grind and has over 20 years of experience in content management and content development.

UK seizes web3 opportunity simplifying crypto regulations

Published 8 months ago on 10/10/2023

By Drew Simpson

As Web3 companies increasingly consider leaving the United States due to regulatory ambiguity, the United Kingdom must simplify its cryptocurrency regulations to attract these businesses. The conservative think tank Policy Exchange recently released a report detailing ten suggestions for improving Web3 regulation in the country. Among the recommendations are reducing liability for token holders in decentralized autonomous organizations (DAOs) and encouraging the Financial Conduct Authority (FCA) to adopt alternative Know Your Customer (KYC) methodologies, such as digital identities and blockchain analytics tools. These suggestions aim to position the UK as a hub for Web3 innovation and attract blockchain-based businesses looking for a more conducive regulatory environment.

Streamlining Cryptocurrency Regulations for Innovation

To make it easier for emerging Web3 companies to navigate existing legal frameworks and contribute to the UK’s digital economy growth, the government must streamline cryptocurrency regulations and adopt forward-looking approaches. By making the regulatory landscape clear and straightforward, the UK can create an environment that fosters innovation, growth, and competitiveness in the global fintech industry.

The Policy Exchange report also recommends not weakening self-hosted wallets or treating proof-of-stake (PoS) services as financial services. This approach aims to protect the fundamental principles of decentralization and user autonomy while strongly emphasizing security and regulatory compliance. By doing so, the UK can nurture an environment that encourages innovation and the continued growth of blockchain technology.

Despite recent strict measures by UK authorities, such as His Majesty’s Treasury and the FCA, toward the digital assets sector, the proposed changes in the Policy Exchange report strive to make the UK a more attractive location for Web3 enterprises. By adopting these suggestions, the UK can demonstrate its commitment to fostering innovation in the rapidly evolving blockchain and cryptocurrency industries while ensuring a robust and transparent regulatory environment.

The ongoing uncertainty surrounding cryptocurrency regulations in various countries has prompted Web3 companies to explore alternative jurisdictions with more precise legal frameworks. As the United States grapples with regulatory ambiguity, the United Kingdom can position itself as a hub for Web3 innovation by simplifying and streamlining its cryptocurrency regulations.

Featured Image Credit: Photo by Jonathan Borba; Pexels; Thank you!
