Tech

Flexibility is key when navigating the future of 6G

Published 2 years ago

By Drew Simpson

The differences between 5G and 6G are not just about what collection of bandwidths will make up 6G in the future and how users will connect to the network, but also about the intelligence built into the network and devices. “The collection of networks that will create the fabric of 6G must work differently for an augmented reality (AR) headset than for an e-mail client on a mobile device,” says Shahriar Shahramian, a research lead with Nokia Bell Laboratories. “Communications providers need to solve a plethora of technical challenges to make a variety of networks based on different technologies work seamlessly,” he says. Devices will have to jump between different frequencies, adjust data rates, and adapt to the needs of the specific application, which could be running locally, on the edge of the cloud, or on a public service.

“One of the complexities of 6G will be, how do we bring the different wireless technologies together so they can hand off to each other, and work together really well, without the end user even knowing about it,” Shahramian says. “That handoff is the difficult part.”

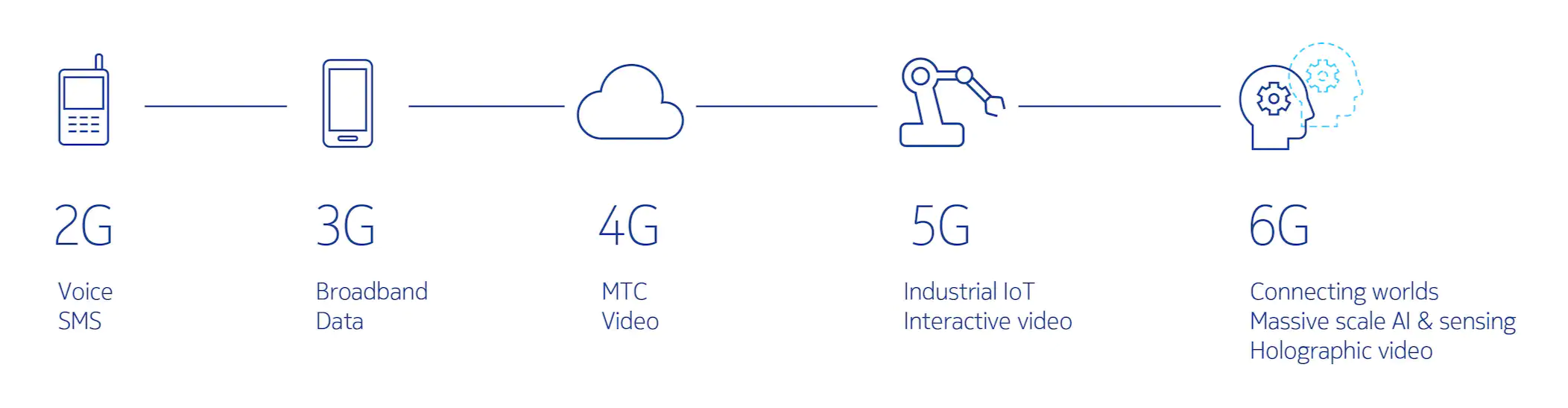

Although the current 5G network allows consumers to experience more seamless handoffs as devices move through different networks—delivering higher bandwidth and lower latency—6G will also usher in a self-aware network capable of supporting and facilitating emerging technologies that are struggling for a foothold today—virtual reality and augmented reality technologies, for example, and self-driving cars. Artificial intelligence and machine learning technology, which will be integrated into 5G as that standard evolves into 5G-Advanced, will be architected into 6G from the beginning to simplify technical tasks, such as optimizing radio signals and efficiently scheduling data traffic.

“Eventually these [technologies] could give radios the ability to learn from one another and their environments,” two Nokia researchers wrote in a post on the future of AI and ML in communications networks. “Rather than engineers telling … nodes of the network how they can communicate, those nodes could determine for themselves, choosing from millions of possible configurations, the best possible way to communicate.”

Testing technology that doesn’t yet exist

This technology is both nascent and complex, so testing will play a critical role in its development. “The companies creating the testbeds for 6G must contend with the simple fact that 6G is an aspirational goal, and not yet a real-world specification,” says Keysight’s Jue. He continues, “The network complexity needed to fulfill the 6G vision will require iterative and comprehensive testing of all aspects of the ecosystem; but because 6G is a nascent network concept, the tools and technology to get there need to be adaptable and flexible.”

Even determining which bandwidths will be used and for what application will require a great deal of research. Second- and third-generation cellular networks used low- and mid-range wireless bands, with frequencies up to 2.6GHz. The next generation, 4G, extended that to 6GHz, while the current technology, 5G, goes even further, adding so-called “mmWave” (millimeter wave) up to 71GHz.

To meet the bandwidth requirements of 6G, Nokia and Keysight are partnering to investigate the sub-terahertz spectrum for communication, which raises new technical issues. Typically, the higher the frequency of the cellular spectrum, the wider the available contiguous bandwidths, and hence the greater the data rate; but this comes at the cost of decreased range for a particular strength of signal. Low-power Wi-Fi networks using the 2.4GHz and 5GHz bands, for example, have a range in tens of meters, but cellular networks using 800MHz and 1.9GHz have ranges in kilometers. The addition of 24-71GHz in 5G means that associated cells are even smaller (tens to hundreds of meters). And for bands above 100GHz, the challenges are even more significant.

“That will have to change,” says Jue. “One of the new key disruptors for 6G could be the move from the millimeter bands used in 5G, up to the sub-terahertz bands, which are relatively unexplored for wireless communication,” he says. “Those bands have the potential to offer broad swaths of spectrum that could be used for high data-throughput applications, but they present a lot of unknowns as well.”
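The trade-off between frequency and range described above can be illustrated with the standard free-space path loss formula, FSPL = 20·log10(4πdf/c). The Python sketch below is only a rough illustration: it assumes ideal free-space propagation between isotropic antennas and ignores atmospheric absorption, obstacles, antenna gain, and transmit power, all of which matter a great deal in real networks. The function names, the 100 dB loss budget, and the 140GHz example frequency are hypothetical choices made for this example, not figures from Nokia or Keysight.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB between two isotropic antennas."""
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )


def range_for_loss_budget_m(loss_budget_db: float, frequency_hz: float) -> float:
    """Distance at which the free-space loss equals the given budget."""
    return (
        SPEED_OF_LIGHT_M_S
        * 10.0 ** (loss_budget_db / 20.0)
        / (4.0 * math.pi * frequency_hz)
    )


if __name__ == "__main__":
    # Hypothetical loss budget, chosen only to compare bands on equal terms.
    budget_db = 100.0
    # Bands mentioned in the article, plus one assumed sub-terahertz example.
    bands_hz = {
        "800MHz": 800e6,
        "1.9GHz": 1.9e9,
        "24GHz": 24e9,
        "71GHz": 71e9,
        "140GHz (assumed sub-THz example)": 140e9,
    }
    for name, freq_hz in bands_hz.items():
        reach_m = range_for_loss_budget_m(budget_db, freq_hz)
        print(f"{name}: about {reach_m:.1f} m for a {budget_db:.0f} dB budget")
```

Under this simplified model, the reachable distance for a fixed loss budget is inversely proportional to frequency, so moving from 800MHz to 140GHz shrinks it by a factor of 175. Real deployments offset part of that loss with high-gain antenna arrays and beamforming, which is one reason the absolute numbers here should not be read as cell sizes.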

Adding sub-terahertz bands to the toolbox of wireless communications devices could open up massive networks of sensing devices, high-fidelity augmented reality, and locally networked vehicles, if technology companies can overcome the challenges.

Beyond different spectrum bands, current ideas for the future 6G network call for new network architectures and better methods of security and reliability. The devices will also need extra sensors and processing capabilities to adapt to network conditions and optimize communications. To do all of this, 6G will require a foundation of artificial intelligence and machine learning to manage the complexities and interactions between every part of the system.

“Every time you introduce a new wireless technology, every time you bring in new spectrum, you make your problem exponentially harder,” Nokia’s Shahramian says.

Nokia expects to start rolling out 6G technology before 2030. Because the definition of 6G remains fluid, development and testing platforms need to support a diversity of devices and applications, and they must accommodate a wide variety of use cases. Moreover, today’s technology may not even support the requirements necessary to test potential 6G applications, requiring companies like Keysight to create new testbed platforms and adapt to changing requirements.

Simulation technology being developed and used today, such as digital twins, will be used to create adaptable solutions. The technology allows real-world data from physical prototypes to be integrated back into the simulation, resulting in future designs that work better in the real world.

“However, while real physical data is needed to create accurate simulations, digital twins would allow more agility for companies developing the technology,” says Keysight’s Jue.

Simulation helps avoid many of the iterative and time-consuming design steps that can slow down development that relies on successive physical prototypes.

“Really, kind of the key here, is a high degree of flexibility, and helping customers to be able to start doing their research and their testing, while also offering the flexibility to change, and navigate through that change, as the technology evolves,” Jue says. “So, starting design exploration in a simulation environment and then combining that flexible simulation environment with a scalable sub-THz testbed for 6G research helps provide that flexibility.”

Nokia’s Shahramian agrees that this is a long process, but the goal is clear: “For technology cycles, a decade is a long loop. For the complex technological systems of 6G, however, 2030 remains an aggressive goal. To meet the challenge, the development and testing tools must match the agility of the engineers striving to create the next network. The prize is significant: a fundamental change to the way we interact with devices and what we do with the technology.”

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.

Tech

The hunter-gatherer groups at the heart of a microbiome gold rush

Published 4 months ago on 12/19/2023

By Drew Simpson

The first step to finding out is to catalogue what microbes we might have lost. To get as close to ancient microbiomes as possible, microbiologists have begun studying multiple Indigenous groups. Two have received the most attention: the Yanomami of the Amazon rainforest and the Hadza, in northern Tanzania.

Researchers have made some startling discoveries already. A study by Sonnenburg and his colleagues, published in July, found that the gut microbiomes of the Hadza appear to include bugs that aren’t seen elsewhere—around 20% of the microbe genomes identified had not been recorded in a global catalogue of over 200,000 such genomes. The researchers found 8.4 million protein families in the guts of the 167 Hadza people they studied. Over half of them had not previously been identified in the human gut.

Plenty of other studies published in the last decade or so have helped build a picture of how the diets and lifestyles of hunter-gatherer societies influence the microbiome, and scientists have speculated on what this means for those living in more industrialized societies. But these revelations have come at a price.

A changing way of life

The Hadza people hunt wild animals and forage for fruit and honey. “We still live the ancient way of life, with arrows and old knives,” says Mangola, who works with the Olanakwe Community Fund to support education and economic projects for the Hadza. Hunters seek out food in the bush, which might include baboons, vervet monkeys, guinea fowl, kudu, porcupines, or dik-dik. Gatherers collect fruits, vegetables, and honey.

Mangola, who has met with multiple scientists over the years and participated in many research projects, has witnessed firsthand the impact of such research on his community. Much of it has been positive. But not all researchers act thoughtfully and ethically, he says, and some have exploited or harmed the community.

One enduring problem, says Mangola, is that scientists have tended to come and study the Hadza without properly explaining their research or their results. They arrive from Europe or the US, accompanied by guides, and collect feces, blood, hair, and other biological samples. Often, the people giving up these samples don’t know what they will be used for, says Mangola. Scientists get their results and publish them without returning to share them. “You tell the world [what you’ve discovered]—why can’t you come back to Tanzania to tell the Hadza?” asks Mangola. “It would bring meaning and excitement to the community,” he says.

Some scientists have talked about the Hadza as if they were living fossils, says Alyssa Crittenden, a nutritional anthropologist and biologist at the University of Nevada in Las Vegas, who has been studying and working with the Hadza for the last two decades.

The Hadza have been described as being “locked in time,” she adds, but characterizations like that don’t reflect reality. She has made many trips to Tanzania and seen for herself how life has changed. Tourists flock to the region. Roads have been built. Charities have helped the Hadza secure land rights. Mangola went abroad for his education: he has a law degree and a master’s from the Indigenous Peoples Law and Policy program at the University of Arizona.

Tech

The Download: a microbiome gold rush, and Eric Schmidt’s election misinformation plan

Published 4 months ago on 12/18/2023

By Drew Simpson

Over the last couple of decades, scientists have come to realize just how important the microbes that crawl all over us are to our health. But some believe our microbiomes are in crisis—casualties of an increasingly sanitized way of life. Disturbances in the collections of microbes we host have been associated with a whole host of diseases, ranging from arthritis to Alzheimer’s.

Some might not be completely gone, though. Scientists believe many might still be hiding inside the intestines of people who don’t live in the polluted, processed environment that most of the rest of us share. They’ve been studying the feces of people like the Yanomami, an Indigenous group in the Amazon, who appear to still have some of the microbes that other people have lost.

But there is a major catch: we don’t know whether those in hunter-gatherer societies really do have “healthier” microbiomes—and if they do, whether the benefits could be shared with others. At the same time, members of the communities being studied are concerned about the risk of what’s called biopiracy—taking natural resources from poorer countries for the benefit of wealthier ones. Read the full story.

—Jessica Hamzelou

Eric Schmidt has a 6-point plan for fighting election misinformation

—by Eric Schmidt, formerly the CEO of Google, and current cofounder of philanthropic initiative Schmidt Futures

The coming year will be one of seismic political shifts. Over 4 billion people will head to the polls in countries including the United States, Taiwan, India, and Indonesia, making 2024 the biggest election year in history.

Tech

Navigating a shifting customer-engagement landscape with generative AI

Published 4 months ago on 12/18/2023

By Drew Simpson

A strategic imperative

Generative AI’s ability to harness customer data in a highly sophisticated manner means enterprises are accelerating plans to invest in and leverage the technology’s capabilities. In a study titled “The Future of Enterprise Data & AI,” Corinium Intelligence and WNS Triange surveyed 100 global C-suite leaders and decision-makers specializing in AI, analytics, and data. Seventy-six percent of the respondents said that their organizations are already using or planning to use generative AI.

According to McKinsey, while generative AI will affect most business functions, “four of them will likely account for 75% of the total annual value it can deliver.” Among these are marketing and sales and customer operations. Yet, despite the technology’s benefits, many leaders are unsure about the right approach to take and mindful of the risks associated with large investments.

Mapping out a generative AI pathway

One of the first challenges organizations need to overcome is senior leadership alignment. “You need the necessary strategy; you need the ability to have the necessary buy-in of people,” says Ayer. “You need to make sure that you’ve got the right use case and business case for each one of them.” In other words, a clearly defined roadmap and precise business objectives are as crucial as understanding whether a process is amenable to the use of generative AI.

Implementing a generative AI strategy can take time. According to Ayer, business leaders should be realistic about how long it takes to formulate a strategy, conduct the necessary training across teams and functions, and identify the areas where the technology adds value. And for any generative AI deployment to work seamlessly, the right data ecosystems must be in place.

Ayer cites WNS Triange’s collaboration with an insurer to create a claims process by leveraging generative AI. Thanks to the new technology, the insurer can immediately assess the severity of a vehicle’s damage from an accident and make a claims recommendation based on the unstructured data provided by the client. “Because this can be immediately assessed by a surveyor and they can reach a recommendation quickly, this instantly improves the insurer’s ability to satisfy their policyholders and reduce the claims processing time,” Ayer explains.

All that, however, would not be possible without data on past claims history, repair costs, transaction data, and other necessary data sets to extract clear value from generative AI analysis. “Be very clear about data sufficiency. Don’t jump into a program where eventually you realize you don’t have the necessary data,” Ayer says.

The benefits of third-party experience

Enterprises are increasingly aware that they must embrace generative AI, but knowing where to begin is another thing. “You start off wanting to make sure you don’t repeat mistakes other people have made,” says Ayer. An external provider can help organizations avoid those mistakes and leverage best practices and frameworks for testing and defining explainability and benchmarks for return on investment (ROI).

Using pre-built solutions by external partners can expedite time to market and increase a generative AI program’s value. These solutions can harness pre-built industry-specific generative AI platforms to accelerate deployment. “Generative AI programs can be extremely complicated,” Ayer points out. “There are a lot of infrastructure requirements, touch points with customers, and internal regulations. Organizations will also have to consider using pre-built solutions to accelerate speed to value. Third-party service providers bring the expertise of having an integrated approach to all these elements.”